About

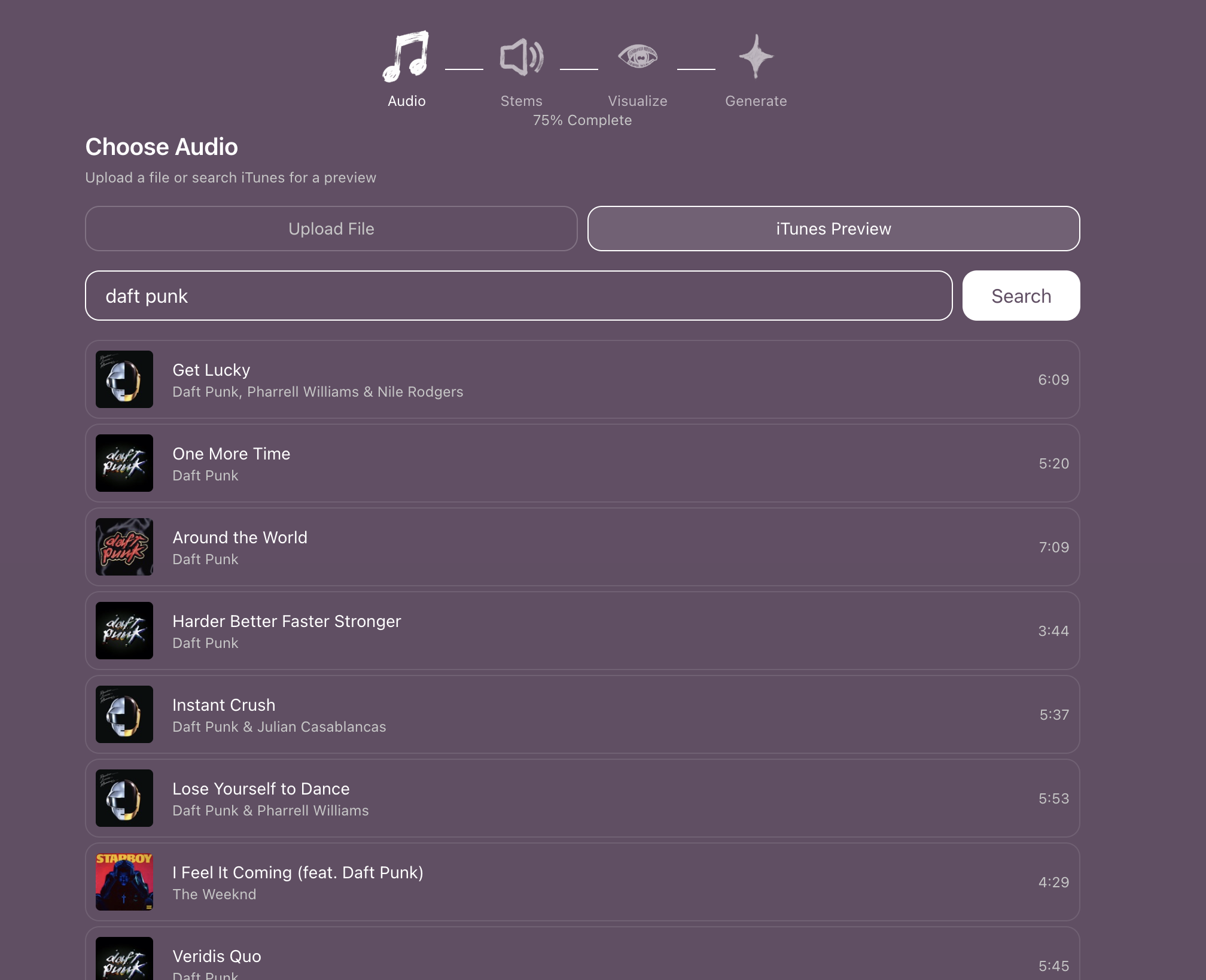

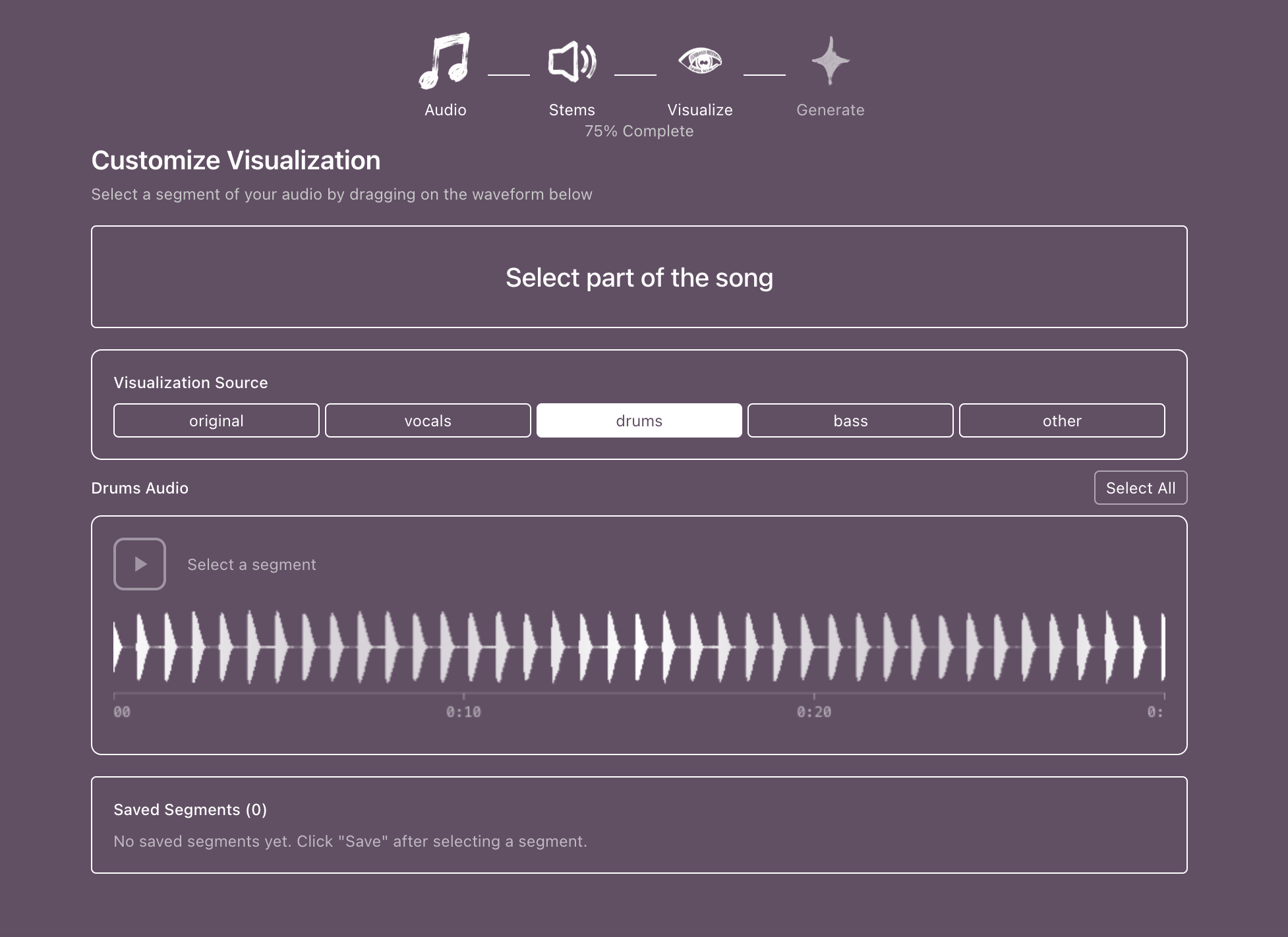

Music Video is a guided audio visualization and video generation experience that combines AI/ML models with basic audio processing. The user is guided through picking a song and running an RMS (root mean square) audio analysis on it, and the result drives the creation of a mask. The mask can then be distorted further to create more interesting shapes and patterns. Finally, the mask is passed into a Wan 2.1 workflow along with a prompt to generate whatever imagery the user can imagine, pulsing to the music.
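To make the analysis step concrete, here is a minimal sketch of how an RMS envelope could drive a mask, one mask per video frame. It is an illustration under assumptions, not the project's actual code: the function names `rms_envelope` and `circle_mask`, the 16 fps frame rate, the circular mask shape, and the radius range are all hypothetical, and a synthetic tone stands in for a real song.

```python
import numpy as np


def rms_envelope(samples, sample_rate, fps=16):
    """Return one RMS value per video frame, normalized to the range [0, 1]."""
    samples = np.asarray(samples, dtype=np.float64)
    hop = sample_rate // fps              # audio samples covered by one video frame
    n_frames = len(samples) // hop
    if n_frames == 0:
        return np.zeros(0)
    # Split the signal into non-overlapping windows, one per video frame.
    frames = samples[: n_frames * hop].reshape(n_frames, hop)
    rms = np.sqrt(np.mean(frames ** 2, axis=1))
    peak = rms.max()
    return rms / peak if peak > 0 else rms


def circle_mask(level, size=64, min_frac=0.1, max_frac=0.45):
    """Return a uint8 mask (0 or 255) with a centered disc whose radius grows with level."""
    radius = size * (min_frac + (max_frac - min_frac) * float(level))
    yy, xx = np.mgrid[:size, :size]
    center = (size - 1) / 2.0
    dist = np.hypot(xx - center, yy - center)
    return np.where(dist <= radius, 255, 0).astype(np.uint8)


# Assumed stand-in for a song: a 220 Hz tone whose volume swells once per second.
sample_rate = 16000
t = np.arange(sample_rate * 2) / sample_rate
tone = np.sin(2 * np.pi * 220 * t) * (0.5 + 0.5 * np.sin(2 * np.pi * 1.0 * t))

envelope = rms_envelope(tone, sample_rate, fps=16)        # one value per frame
masks = np.stack([circle_mask(v) for v in envelope])      # (frames, height, width)
```

Each entry of `masks` is a single frame of the mask video: louder passages produce a larger disc, quieter ones a smaller disc. Any distortion of the mask would be applied to these frames before they are handed to the video model.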

The mask and distortion are predictable, but the generated visuals are not. Wan 2.1 is quite an expressive model, but it occasionally struggles to reconcile its expectations of how a subject should look with the mask it is forced to use for generation. When that happens, the model forces an interpretation that can be incredibly uncanny. Other times the form matches its expectations, and it produces appealing visuals that dance in whimsical ways the user may or may not have imagined.

Artist's Intention

This project explores the collaborative expressive power of a naive user and an AI model, and it demonstrates the dynamic nature of the interaction between these human and computer systems. The experience is not unlike the one many people have when trying to generate a particular image or video with a model, where discovering the best output through brute force and iteration has a slot-machine quality. This project serves as a more visceral example because it involves multiple expectations at once, and when the symbols do align, the result is a heightened sense of alignment.

Gallery